Michael Wimmer is currently an Associate Professor at the Institute of Computer Graphics and Algorithms of the Vienna University of Technology, where he heads the Rendering Group. His academic career started with his M.Sc. in 1997 at the Vienna University of Technology, where he also obtained his Ph.D. in 2001. His research interests are real-time rendering, computer games, real-time visualization of urban environments, point-based rendering, procedural modeling and shape modeling. He has coauthored over 100 papers in these fields, as well as the book *Real-Time Shadows*. He has served on many program committees, including those of ACM SIGGRAPH, SIGGRAPH Asia, Eurographics, the Eurographics Symposium on Rendering (EGSR) and ACM I3D. He is currently an associate editor of Computers & Graphics and IEEE Transactions on Visualization and Computer Graphics (TVCG). He was papers co-chair of EGSR 2008, Pacific Graphics 2012 and Eurographics 2015. His talk takes place on Tuesday, October 20, at 1 pm in room A112.

Computer Graphics Meets Computational Design

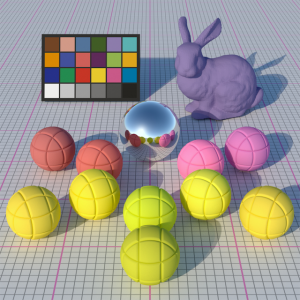

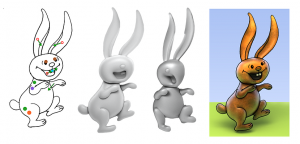

In this talk, I will report on recent advances in computer graphics that are of great interest for next-generation computational design tools. I will present methods for modeling from images and modeling by example (from single as well as multiple examples), but also procedural modeling and the modeling of physical behavior and light transport, all recently developed in our group. The common rationale behind our research is to exploit real-time processing power and computer graphics algorithms to enable interactive computational design tools that allow short feedback loops in the design process.