Marc Delcroix is a senior research scientist at NTT Communication Science Laboratories, Kyoto, Japan. He received the M.Eng. degree from the Free University of Brussels, Brussels, Belgium, and the Ecole Centrale Paris, Paris, France, in 2003 and the Ph.D. degree from the Graduate School of Information Science and Technology, Hokkaido University, Sapporo, Japan, in 2007. His research interests include robust multi-microphone speech recognition, acoustic model adaptation, integration of the speech enhancement front-end and the recognition back-end, speech enhancement, and speech dereverberation. He took an active part in the development of the NTT robust speech recognition systems for the REVERB and the CHiME 1 and 3 challenges, all of which achieved the best performance on their tasks. He was one of the organizers of the REVERB challenge 2014 and of ASRU 2017. He is a visiting lecturer at the Faculty of Science and Engineering of Waseda University, Tokyo, Japan.

Keisuke Kinoshita is a senior research scientist at NTT Communication Science Laboratories, Kyoto, Japan. He received the M.Eng. degree and the Ph.D. degree from Sophia University, Tokyo, Japan, in 2003 and 2010, respectively. He joined NTT in 2003 and has since been working on speech and audio signal processing. His research interests include single- and multichannel speech enhancement and robust speech recognition. He was the Chief Coordinator of the REVERB challenge 2014 and an organizing committee member of ASRU 2017. He received the IEICE Paper Award (2006), the ASJ Technical Development Award (2009), the ASJ Awaya Young Researcher Award (2009), the Japan Audio Society Award (2010), and the Maeshima Hisoka Award (2017). He is a visiting lecturer at the Faculty of Science and Engineering of Doshisha University, Kyoto, Japan.

Their talk takes place on Monday, August 28, 2017 at 13:00 in room A112.

NTT far-field speech processing research

The success of voice search applications and voice-controlled devices such as the Amazon Echo confirms that speech is becoming a common modality for accessing information. Despite great recent progress in the field, it is still challenging to achieve high automatic speech recognition (ASR) performance when using microphones distant from the speakers (far-field), because of noise, reverberation, and potential interfering speakers. It is even more challenging when the target speech consists of spontaneous conversations.

At NTT, we are pursuing research on far-field speech recognition, focusing on speech enhancement front-ends and robust ASR back-ends, with the aim of building next-generation ASR systems able to understand natural conversations. Our research achievements have been combined into the ASR systems we developed for the REVERB and CHiME 3 challenges, and for meeting recognition.

In this talk, after giving a brief overview of the research activity of our group, we will introduce two of our recent research achievements in more detail. First, we will present our work on speech dereverberation using the weighted prediction error (WPE) algorithm. We have recently proposed an extension that integrates deep neural network based speech modeling into the WPE framework, and demonstrated further potential performance gains for reverberant speech recognition.

Next, we will discuss our recent work on acoustic model adaptation to create ASR back-ends that are robust to speaker and environment variations. We have recently proposed a context adaptive neural network architecture, which is a powerful way to exploit speaker or environment information to perform rapid acoustic model adaptation.

Tomáš Mikolov has been a research scientist at Facebook AI Research since 2014. Previously, he was a member of the Google Brain team, where he developed efficient algorithms for computing distributed representations of words (the word2vec project). He obtained his PhD from Brno University of Technology for work on recurrent neural network based language models (RNNLM). His long-term research goal is to develop intelligent machines capable of communicating with people using natural language. His talk will take place on Tuesday, January 3rd, 2017, 5pm in room

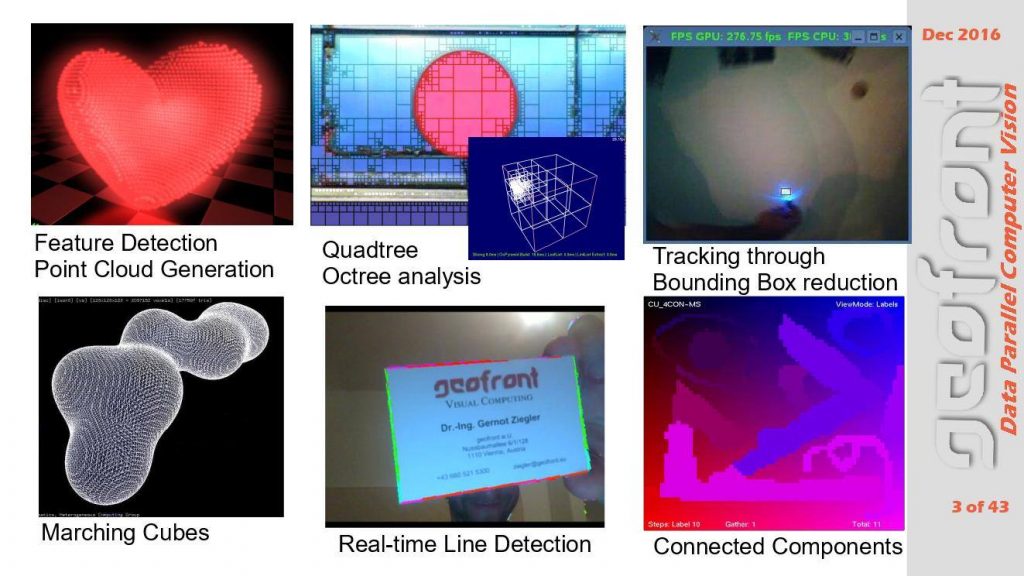

Elmar Eisemann

Petr Kubánek received a master's degree in software engineering from the Faculty of Mathematics and Physics of Charles University in Prague, and a master's degree in fuzzy logic from the University of Granada in Spain. He is currently a research fellow at the Institute of Physics of the Czech Academy of Sciences in Prague. He is developing RTS2 (Remote Telescope System, 2nd Version), a package for fully autonomous astronomical observatory control and scheduling. RTS2 is used at multiple observatories around the planet, on all continents (one of the RTS2 collaborators is currently wintering over at Dome C in Antarctica). Petr’s interests and expertise span from distributed device control through databases to image processing and data mining. During his career, he has collaborated with top world institutions (Harvard/CfA on the FLWO 48″ telescope, UC Berkeley on the RATIR 1.5m telescope, NASA/IfA on the ATLAS project, ESA/ISDEFE on the TBT project, SLAC and BNL on Large Synoptic Survey Telescope (LSST) CCD testing) and enjoyed travel to restricted areas (he is scheduled for an observing run at the US Naval Observatory in Arizona). He is on a kind of parental leave, enjoying his new family, and slowly returning to the vivid astronomical world. His talk takes place on Tuesday, December 8, 2pm in room
