S. Umesh is a professor in the Department of Electrical Engineering at the Indian Institute of Technology – Madras. His research interests are mainly in automatic speech recognition, particularly low-resource modelling and speaker normalization and adaptation. He has also been a visiting researcher at AT&T Laboratories, Cambridge University and RWTH-Aachen under the Humboldt Fellowship. He is currently leading a consortium of 12 Indian institutions to develop speech-based systems in the agricultural domain. His talk takes place on Tuesday, June 27, 2017 at 13:00 in room A112.

Acoustic Modelling of low-resource Indian languages

In this talk, I will present recent efforts in India to build speech-based systems in the agriculture domain that give about 600 million farmers easy access to information. These systems are being developed by a consortium of 12 Indian institutions, initially in 12 languages, with a later expansion to another 12 languages. Since the systems are used in extremely noisy environments such as fields, the emphasis is on high accuracy, achieved by using directed queries that elicit short, phrase-like responses. Within this framework, we explored cross-lingual and multilingual acoustic modelling techniques using subspace-GMM and phone-CAT approaches. We also extended the use of phone-CAT to phone mapping and articulatory feature extraction, with the extracted features then fed to a DNN-based acoustic model. Further, we explored the joint estimation of the acoustic model (DNN) and the articulatory feature extractors. These approaches gave a significant improvement in recognition performance compared to building systems using data from only one language. Finally, since the speech consisted mostly of short and noisy utterances, conventional adaptation and speaker-normalization approaches could not be easily used. We investigated the use of a neural network to map filter-bank features to fMLLR/VTLN features, so that normalization can be done at the frame level without a first-pass decode and without needing long utterances to estimate the transforms. Alternatively, we used a teacher-student framework in which a teacher trained on normalized features provides “soft targets” to a student network trained on un-normalized features. In both approaches, we obtained recognition performance better than that of i-vector-based normalization schemes.

Tomáš Mikolov has been a research scientist at Facebook AI Research since 2014. Previously he was a member of the Google Brain team, where he developed efficient algorithms for computing distributed representations of words (the word2vec project). He obtained his PhD from Brno University of Technology for work on recurrent neural network based language models (RNNLM). His long-term research goal is to develop intelligent machines capable of communicating with people using natural language. His talk will take place on Tuesday, January 3rd, 2017, 5pm in room

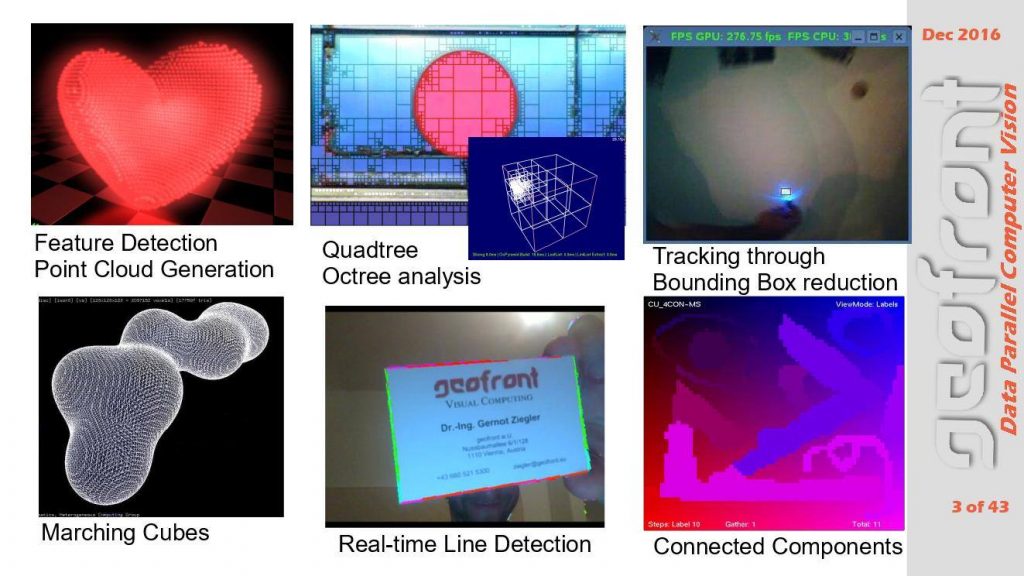

Elmar Eisemann

Petr Kubánek received a master's degree in software engineering from the Faculty of Mathematics and Physics of Charles University in Prague, and a master's degree in fuzzy logic from the University of Granada in Spain. Currently he is a research fellow at the Institute of Physics of the Czech Academy of Sciences in Prague. He is developing RTS2 (Remote Telescope System 2nd Version), a package for fully autonomous astronomical observatory control and scheduling. RTS2 is being used at multiple observatories around the planet, on all continents (one of the RTS2 collaborators is currently wintering over at Dome C in Antarctica). Petr’s interests and expertise span from distributed device control through databases to image processing and data mining. During his career, he has collaborated with top world institutions (Harvard/CfA on the FLWO 48″ telescope, UC Berkeley on the RATIR 1.5m telescope, NASA/IfA on the ATLAS project, ESA/ISDEFE on the TBT project, SLAC and BNL on Large Synoptic Survey Telescope (LSST) CCD testing) and enjoyed travel to restricted areas (he is scheduled for an observing run at the US Naval Observatory in Arizona). He is on a kind of parental leave, enjoying his new family, and slowly returning to the vivid astronomical world. His talk takes place on Tuesday, December 8, 2pm in room
